How to review processed docs in GDP (old)

Learn how to mark document outputs as correct or incorrect (and edit or relabel any that are incorrect) within the super.AI platform.

The workflow steps vary by document type (such as invoice or GewA1) and can be configured by users.

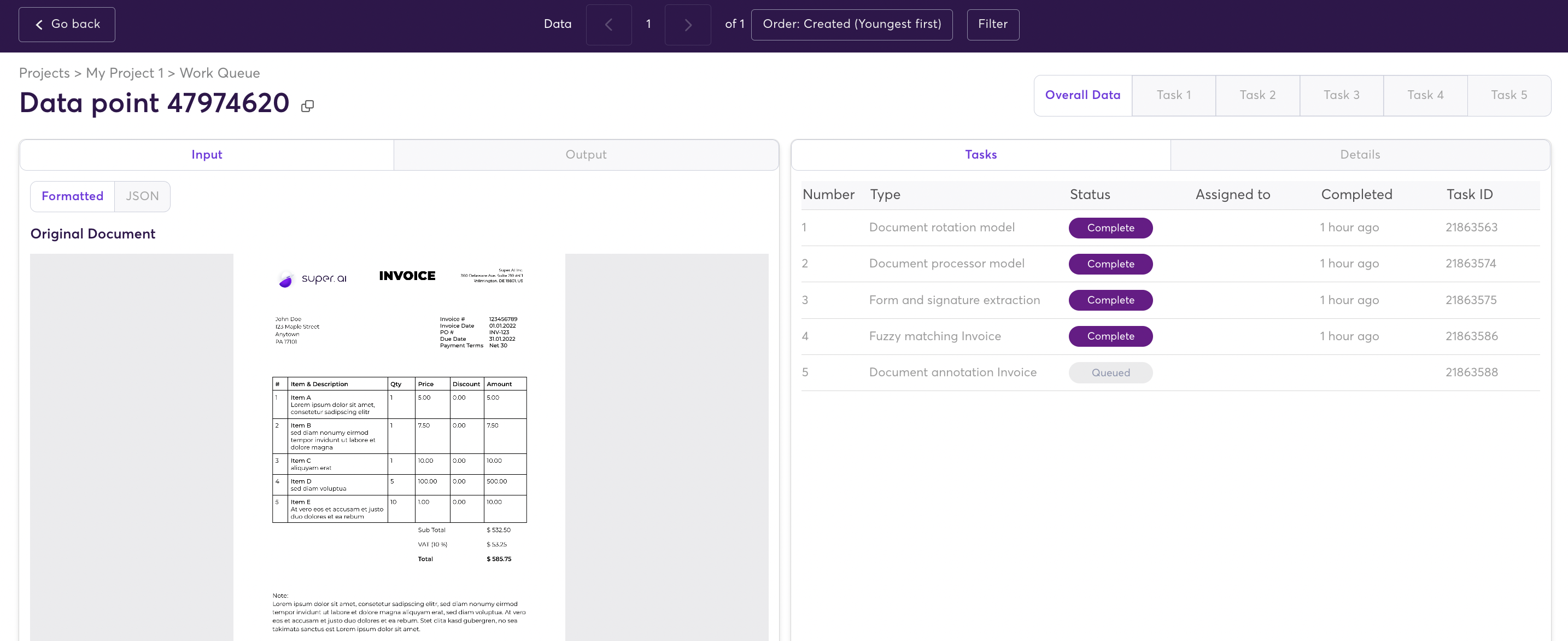

Below is a sample workflow for a document with five processing steps: four are fully automated and one is a human-in-the-loop (HITL) task. You can see the status of each task by clicking the data point in the work queue:

- Document rotation model (fully automated)

- Document processor model (fully automated)

- Form and signature extraction (fully automated)

- Fuzzy matching (fully automated)

- Document annotation (human-in-the-loop task)

Overview of the tasks' status for each data point

Completion of all five tasks is required to change the data point status to "completed"

By default, all five tasks are required. This means the human-in-the-loop task cannot be skipped automatically and must be "completed" before the data point/document status changes to "completed" and downloadable outputs are created.

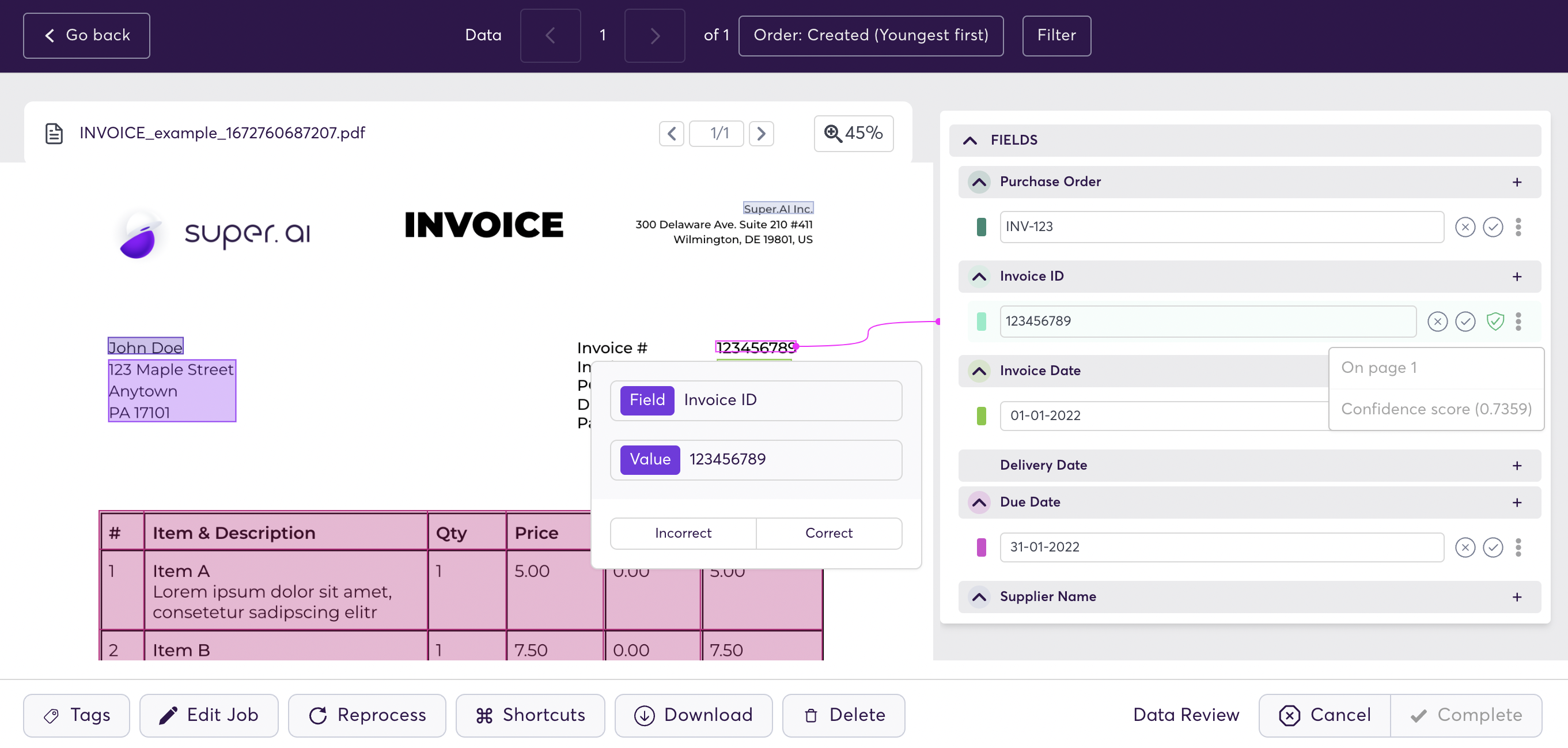
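The completion rule above can be sketched as a simple "all tasks done" check. This is a hypothetical illustration, not super.AI code: the task names come from the sample workflow, while `TaskStatus`, `data_point_status`, and the "in progress" label are assumptions made for the example.

```python
from enum import Enum
from typing import Dict

class TaskStatus(Enum):
    PENDING = "pending"
    COMPLETED = "completed"

# Task names copied from the sample workflow; real workflows are configurable per document type.
SAMPLE_WORKFLOW = [
    "Document rotation model",
    "Document processor model",
    "Form and signature extraction",
    "Fuzzy matching",
    "Document annotation",  # the human-in-the-loop (HITL) task
]

def data_point_status(task_statuses: Dict[str, TaskStatus]) -> str:
    """Return "completed" only when every workflow task is completed.

    "in progress" is a hypothetical placeholder for any other state.
    """
    if all(task_statuses.get(task) is TaskStatus.COMPLETED for task in SAMPLE_WORKFLOW):
        return "completed"
    return "in progress"

# The four automated tasks are done, but the HITL task is still pending.
statuses = {task: TaskStatus.COMPLETED for task in SAMPLE_WORKFLOW[:4]}
statuses["Document annotation"] = TaskStatus.PENDING
print(data_point_status(statuses))  # in progress
```

Because the HITL task is part of the required list, the data point cannot reach "completed" until someone submits the annotation.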

Document annotation (HITL) task

Click "Process myself" on the left side to review the processed document. On the left side, you see the formatted document (with bounding boxes); on the right side, you see the key-value pairs that have been automatically identified and classified.

Clicking a bounding box on the left takes you to the corresponding key-value pair in the list. Clicking a value on the right takes you to where it was found in the document.
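The two-way navigation between bounding boxes and key-value pairs can be pictured as each pair carrying the box where its value was found. The sketch below is hypothetical: `BoundingBox`, `KeyValuePair`, `pair_at`, `box_for`, and the page-coordinate model are assumptions for illustration, not how the super.AI interface is implemented.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass(frozen=True)
class BoundingBox:
    page: int
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, page: int, x: float, y: float) -> bool:
        """True if the point (x, y) on `page` lies inside this box."""
        return self.page == page and self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1

@dataclass(frozen=True)
class KeyValuePair:
    key: str
    value: str
    box: BoundingBox  # where the value was found in the document

def pair_at(pairs: List[KeyValuePair], page: int, x: float, y: float) -> Optional[KeyValuePair]:
    """Document -> list: return the pair whose bounding box was clicked, if any."""
    return next((p for p in pairs if p.box.contains(page, x, y)), None)

def box_for(pairs: List[KeyValuePair], key: str) -> Optional[BoundingBox]:
    """List -> document: return where the value for `key` was found, if any."""
    return next((p.box for p in pairs if p.key == key), None)
```

Keys such as `invoice_number` in the test data are placeholders; actual keys depend on the document type.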

Clicking "submit" finishes the HITL task and sets the data point/document status to "completed".

Review the key-value pairs in the document annotation task and submit if they are correct; the data point status then changes to "completed"

Data points are assigned to specific users

Once "Process myself" is clicked, the data point/task is assigned to the user who clicked it and cannot be reviewed by another user. To assign the data point/task to a different user, you have two options: either reprocess the data point so that another user can click "Process myself", or submit the data point so that it can be annotated by another user afterward.
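The assignment rules can be modeled as a small state machine. This is a hypothetical sketch of the behavior described above, not a super.AI API: `AnnotationTask` and its `process_myself`, `reprocess`, and `submit` methods are invented names that mirror the buttons and actions in the interface.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class AnnotationTask:
    data_point_id: str
    assignee: Optional[str] = None
    submitted: bool = False

    def process_myself(self, user: str) -> None:
        """Assign the task to the clicking user unless another user already holds it."""
        if self.assignee is not None and self.assignee != user:
            raise PermissionError(f"task is assigned to {self.assignee}")
        self.assignee = user

    def reprocess(self) -> None:
        """Option 1: reprocessing releases the assignment for another user."""
        self.assignee = None
        self.submitted = False

    def submit(self, user: str) -> None:
        """Option 2: submitting releases the task so another user can annotate it later."""
        if self.assignee != user:
            raise PermissionError("only the assigned user can submit")
        self.submitted = True
        self.assignee = None
```

Both release paths end with `assignee` cleared, which is what lets a second user pick up the data point.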

Confidence scores

A confidence score indicates how confident the machine learning model is that a value has been correctly assigned to its key. The score ranges from 0 (low) to 1 (high).

You can review the confidence score of each field by clicking the three dots next to the value.

You can review confidence scores at the field level
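If you want to prioritize which fields to check, one approach is to compare each field's confidence score against a cutoff. The sketch below is hypothetical: `low_confidence_fields`, the field names, and the 0.8 threshold are assumptions for illustration, not platform defaults.

```python
from typing import Dict, List

def low_confidence_fields(scores: Dict[str, float], threshold: float = 0.8) -> List[str]:
    """Return field names scoring below `threshold`, lowest score first.

    The 0.8 default is a hypothetical cutoff; choose one that suits your documents.
    """
    for name, score in scores.items():
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"{name}: confidence {score} is outside [0, 1]")
    flagged = [name for name, score in scores.items() if score < threshold]
    return sorted(flagged, key=lambda name: scores[name])

scores = {"invoice_number": 0.97, "total": 0.62, "due_date": 0.75}
print(low_confidence_fields(scores))  # ['total', 'due_date']
```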

Complete document annotation task

Clicking "submit" completes the document annotation task.

At that point, all five tasks are completed, the document/data point status is "completed", and the extracted information can be downloaded in .csv or .json format.

After completion of the Document annotation task, the document's status is "completed"
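If you process the downloaded files further, the sketch below loads either format into the same key-to-value dictionary. The export layouts are assumptions: a JSON array of `{"key": ..., "value": ...}` objects and a CSV with `key` and `value` columns. The actual super.AI exports may be structured differently, so adjust the field names to match your files.

```python
import csv
import io
import json
from typing import Dict

def load_json_export(raw: str) -> Dict[str, str]:
    """Assumes a JSON array of {"key": ..., "value": ...} objects (hypothetical shape)."""
    return {item["key"]: item["value"] for item in json.loads(raw)}

def load_csv_export(raw: str) -> Dict[str, str]:
    """Assumes a CSV with "key" and "value" header columns (hypothetical shape)."""
    return {row["key"]: row["value"] for row in csv.DictReader(io.StringIO(raw))}

json_export = '[{"key": "invoice_number", "value": "INV-001"}, {"key": "total", "value": "99.00"}]'
csv_export = "key,value\ninvoice_number,INV-001\ntotal,99.00\n"
print(load_json_export(json_export) == load_csv_export(csv_export))  # True
```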

Values (tables) need to have keys (table-keys) assigned to them

Values of all data types (including tables) need to have keys assigned in order to be extracted. You might find a list of values in the "to be labeled" section; if those values are not assigned to a key, they are lost when the task is submitted.
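The rule that unkeyed values are lost on submission can be illustrated with a pre-submit check. This is a hypothetical sketch: representing extracted items as `(key, value)` tuples, with `None` as the key for values still in "to be labeled", is an assumption, and `unassigned_values` and `submitted_output` are invented helper names.

```python
from typing import Dict, List, Optional, Tuple

# Each extracted item is (key, value); key is None while the value is still in "to be labeled".
Extracted = List[Tuple[Optional[str], str]]

def unassigned_values(extracted: Extracted) -> List[str]:
    """Values with no key; these would be lost if the task were submitted now."""
    return [value for key, value in extracted if key is None]

def submitted_output(extracted: Extracted) -> Dict[str, str]:
    """Only keyed values survive submission and appear in the extracted output."""
    return {key: value for key, value in extracted if key is not None}

extracted = [("invoice_number", "INV-001"), (None, "Net 30"), ("total", "99.00")]
print(unassigned_values(extracted))  # ['Net 30']
```

Checking for a non-empty `unassigned_values` list before submitting is the programmatic equivalent of clearing the "to be labeled" section.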


For a deep-dive into our labeling interface, please see the following How To guide