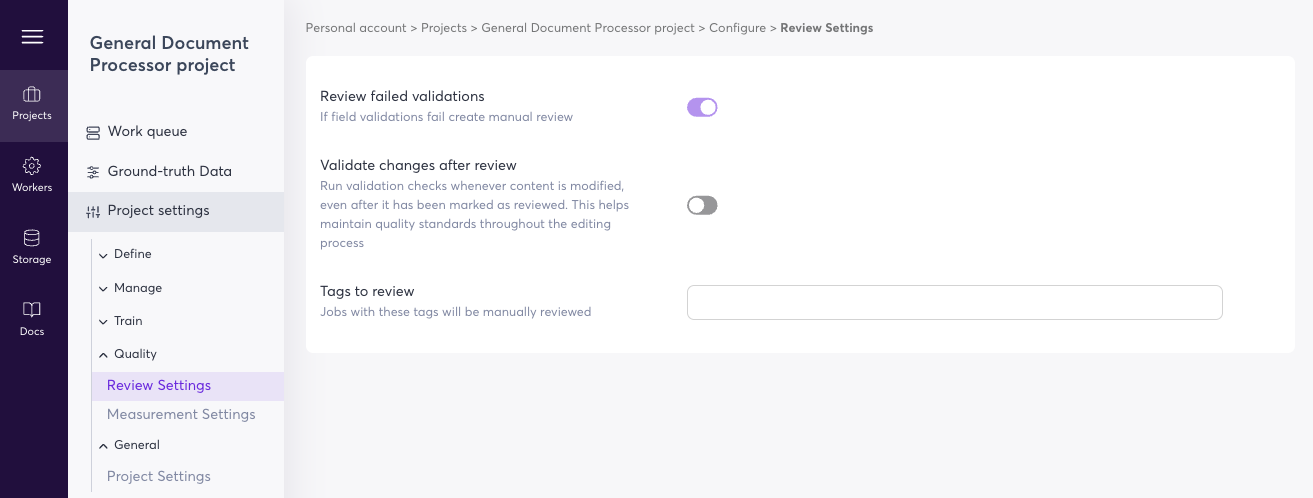

Review Settings

Review Settings are designed to assist you in identifying jobs that fall short of predefined quality standards, enabling you to take corrective action and ensure that your data meets the required level of accuracy and reliability.

Quality indicators to choose:

- Review failed validations

If validations fail, create a manual review.

→ If at least 1 validation rule for a field or table fails, that job will be automatically assigned the state correct after the time given in the delay.

- Validate changes after review

Run validation checks whenever content is modified, even after it has been marked as reviewed. This helps maintain quality standards throughout the editing process.

→ If a job output is edited, that job will automatically move to post-processing and the validation rules will run again. This means that if at least 1 validation rule fails, the job reaches the needs review state even though it has been reviewed before.

- Tags to review

Fields or jobs with these tags will be manually reviewed.

→ If a document has at least 1 of the tags assigned, that job will be automatically assigned the state needs review.

Note: You can only choose existing tags and the tag must be present before a document gets (re)processed.
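The two "needs review" rules above (tags and re-validation after an edit) can be illustrated with a minimal sketch. This is not the product's implementation or API; the function names, parameters, and the idea of passing tags as plain strings are all hypothetical and only mirror the behavior described in this section.

```python
from typing import Iterable

# Hypothetical state label, taken from the wording of this page.
NEEDS_REVIEW = "needs review"


def needs_review_by_tags(job_tags: Iterable[str], review_tags: Iterable[str]) -> bool:
    """Return True if the job carries at least 1 tag listed under 'Tags to review'."""
    return bool(set(job_tags) & set(review_tags))


def needs_review_after_edit(failed_rule_count: int) -> bool:
    """Return True if re-running validation on an edited job produced at least 1 failure.

    Assumption: the caller has already re-run the validation rules and
    counted how many of them failed.
    """
    return failed_rule_count >= 1
```

For example, a job tagged `invoice` and `urgent` would be flagged when `urgent` is among the configured review tags, and a previously reviewed job would be flagged again if a single rule fails after an edit.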

Coming soon

We are continuously adding other quality metrics. These will include, but are not limited to, the following:

- Confidence Review Thresholds

Choose review thresholds based on the AI's confidence score for all fields/tables.

→ A job where at least 1 field or table confidence score is below the auto-reject boundary will be automatically assigned the state needs review.

→ A job where at least 1 field or table confidence score is above the auto-accept boundary will be automatically assigned the state correct.

If you want to define specific mandatory fields that should never be empty, use the field-level validation rules.
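As a rough illustration of the planned confidence thresholds, the sketch below maps a job's field and table confidence scores to a state. It is hypothetical throughout: the function name, the 0 to 1 score range, and the return values are assumptions. In particular, this page does not say what happens when one score is below the auto-reject boundary while another is above the auto-accept boundary, so the sketch assumes the auto-reject rule is checked first.

```python
from typing import Optional, Sequence


def state_from_confidence(
    scores: Sequence[float],
    auto_reject: float,
    auto_accept: float,
) -> Optional[str]:
    """Map field/table confidence scores to a job state (hypothetical helper).

    Assumption: the auto-reject rule takes precedence when both rules match.
    Returns None when neither rule applies.
    """
    # At least 1 score below the auto-reject boundary -> needs review.
    if any(score < auto_reject for score in scores):
        return "needs review"
    # At least 1 score above the auto-accept boundary -> correct.
    if any(score > auto_accept for score in scores):
        return "correct"
    return None
```

With an auto-reject boundary of 0.5 and an auto-accept boundary of 0.9, scores of `[0.95, 0.4]` would yield needs review under this assumed precedence, while `[0.95, 0.7]` would yield correct.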
